
Bottom-up Retinotopic Organization Supports Top-down Mental Imagery

Abstract

Finding a path between locations is a routine task in daily life. Mental navigation is often used to plan a route to a destination that is not visible from the current location. We first used functional magnetic resonance imaging (fMRI) and surface-based averaging methods to find high-level brain regions involved in imagined navigation between locations in a building very familiar to each participant. This revealed a mental navigation network that includes the precuneus, retrosplenial cortex (RSC), parahippocampal place area (PPA), occipital place area (OPA), supplementary motor area (SMA), premotor cortex, and areas along the medial and anterior intraparietal sulcus. We then visualized retinotopic maps in the entire cortex using wide-field, natural scene stimuli in a separate set of fMRI experiments. This revealed five distinct visual streams or ‘fingers’ that extend anteriorly into middle temporal, superior parietal, medial parietal, retrosplenial and ventral occipitotemporal cortex. By using spherical morphing to overlap these two data sets, we showed that the mental navigation network primarily occupies areas that also contain retinotopic maps. Specifically, scene-selective regions RSC, PPA and OPA have a common emphasis on the far periphery of the upper visual field. These results suggest that bottom-up retinotopic organization may help to efficiently encode scene and location information in an eye-centered reference frame for top-down, internally generated mental navigation. This study pushes the border of visual cortex further anterior than was initially expected.

INTRODUCTION

A large portion of modern human activity takes place in man-made structures such as residential, commercial, and public buildings. A common daily routine is to walk between locations in a building. This seemingly trivial task involves finding a route from the current location to the destination, and monitoring and verifying expected surroundings along the route. The former requires allocentric (world-centered) representations of locations and directions in a building, while the latter requires egocentric (body-centered) representations of the scenes expected during self-motion through the immediate environment [1, 2]. Brain regions that support large-scale navigation between places have been revealed by neuropsychological and functional neuroimaging studies in humans [1-8]. The hippocampus, parahippocampal cortex, and retrosplenial cortex (RSC) play essential roles in forming and recalling topographical representations of locations, directions, and routes, often referred to as a cognitive map [1-6]. The parahippocampal place area (PPA) and occipital place area (OPA) (previously termed transverse occipital sulcus [TOS]) are activated by the scenes and layout of the local environment [7-10]. The precuneus is important for visuospatial imagery, episodic memory, and mental navigation [11, 12].

Although the aforementioned brain regions are known to be involved in different aspects of visual spatial processing, their internal neuronal organization is less clear [13]. A recent study revealed that the posterior parahippocampal cortex (PHC) contains two retinotopic maps (PHC-1 and PHC-2) that overlap with the functionally defined PPA [14]. Another study showed that OPA (TOS) overlaps with retinotopic areas V7, V3B, and LO-1 [15]. Finally, RSC is located adjacent to the periphery of V1 and V2, but it has not been proven to be retinotopic in humans [13, 15]. Based on recent monkey studies showing a topographic map in prostriata located beyond the peripheral borders of V1 and V2 [16, 17], we hypothesize that human RSC contains a retinotopic representation of the far periphery. The search for retinotopic maps in human RSC has probably failed so far because the maximum stimulus dimension used in most retinotopic mapping studies is limited to 10–20° eccentricity. A few recent studies have probed higher eccentricities using flickering checkerboard stimuli [18-20], but they have not yet clearly shown retinotopic organization in human RSC.

To determine to what extent high-level cortical areas involved in navigation are retinotopic, we used functional magnetic resonance imaging (fMRI) to localize brain regions involved in a mental navigation task (top-down) and then used wide-field natural scene stimuli to determine whether these regions are organized retinotopically (bottom-up). Mental imagery is often used to plan a route to a destination that is not visible from the current location [21-23], so we scanned subjects while they periodically imagined walking to out-of-sight goal locations from a current resting location in a familiar building. Then, in retinotopic mapping experiments, subjects were scanned while they directly viewed wide-field videos of natural scenes masked in a rotating wedge or in an expanding (or contracting) ring. High-resolution surface-based group-averaging methods (spherical morphing driven by a sulcus measure) were used to obtain average activation maps for each experiment and then to precisely overlay the results of the two experiments on a single cortical surface. Retinotopic organization was then analyzed in surface-based regions of interest (sROIs) selected from the imagined navigation experiment, including RSC, PPA, OPA, precuneus, and posterior parietal cortex.

MATERIALS AND METHODOLOGY

Subjects

A total of thirty healthy subjects (14 males, 16 females; 19–48 years old; mean = 26.2, SD = 7.2) with normal or corrected-to-normal vision participated in this study. Ten subjects participated in the imagined navigation experiment. Twenty-four subjects participated in retinotopic mapping experiments. Four subjects participated in both. All subjects gave informed consent according to protocols approved by the Human Research Protections Program at the University of California, San Diego (UCSD).

Imagined Navigation Experiment

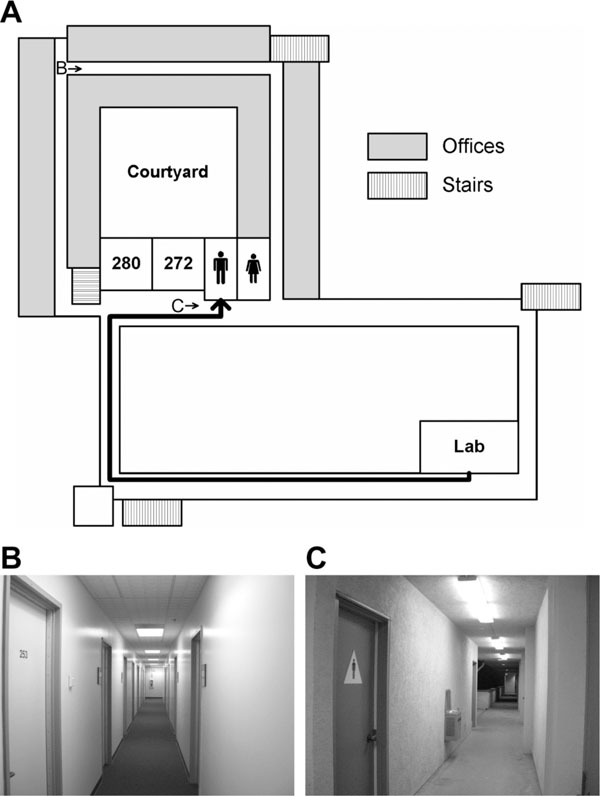

To localize brain regions involved in mental navigation, ten subjects (7 males, 3 females) who had occupied lab and office spaces for more than a year in the Cognitive Science Building (CSB) at UCSD participated in an imagined navigation experiment. Each subject participated in four 256-s scans of a two-condition block-design paradigm, which compared 16 s of imagined navigation from a current resting location to a target location in CSB (e.g., ‘walk to restroom’ from their respective labs) with 16 s of imagined rest at the target location (e.g., ‘stay at restroom’) (Fig. 1). This simple task has been shown to effectively activate brain regions that play important roles in navigation [21-23]. A 2-s verbal instruction was delivered at the beginning of each 16-s block. Subjects closed their eyes in complete darkness and listened to verbal instructions via MR-compatible headphones (Resonance Technology, Northridge, CA, USA). Nine familiar locations (mailbox room, courtyard, kitchen, restroom, room 003, room 180, room 272, ‘my lab,’ and ‘my office’) distributed across three floors of the building were randomized within and across scans. To ensure that subjects had an accurate cognitive map of the building, they were given a few pairs of current-target locations and were asked to verbally report both the route taken and scenes encountered along the route before they entered the MRI scanner. The next target location was always different from the current location. Subjects were informed that they were in their respective offices (initial location) when a new scan started. To avoid idling during periods of imagined navigation, subjects were asked to take a longer route to maintain an active status of self-motion if the direct distance between two locations was too short and took significantly less than 14 s. On debriefing, subjects reported that an alternate route was only very rarely required.
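The block structure of one scan can be sketched in a few lines of Python. This is only an illustration: the function name `make_schedule`, the seeding, and the uniform random choice of targets are assumptions, since the text states only that targets were randomized, that each target differed from the current location, and that every scan started from the subject's office.

```python
import random

# Locations, block length, and scan length are taken from the text.
LOCATIONS = ["mailbox room", "courtyard", "kitchen", "restroom", "room 003",
             "room 180", "room 272", "my lab", "my office"]
BLOCK_S = 16   # seconds per block
SCAN_S = 256   # seconds per scan

def make_schedule(seed=0, start="my office"):
    """Return (onset_s, condition, target) tuples for one scan.

    Hypothetical helper: the uniform random draw is an assumption,
    not the authors' documented randomization procedure.
    """
    rng = random.Random(seed)
    schedule, current = [], start
    for onset in range(0, SCAN_S, 2 * BLOCK_S):
        # The next target must differ from the current location.
        target = rng.choice([loc for loc in LOCATIONS if loc != current])
        schedule.append((onset, "navigate", target))
        schedule.append((onset + BLOCK_S, "rest", target))
        current = target
    return schedule
```

Under these assumptions each scan contains eight navigate/rest cycles, so the task frequency is 8 cycles per 256-s scan.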

Fig. (1). Imagined navigation in a building. (A) A schematic (not drawn to scale) of the second floor in the Cognitive Science Building at UCSD. The thick black trajectory indicates a route between a lab space and a restroom. (B) and (C) Sample scenes at locations B (hallway) and C (restroom) on the map in (A).

Retinotopic Mapping Experiments

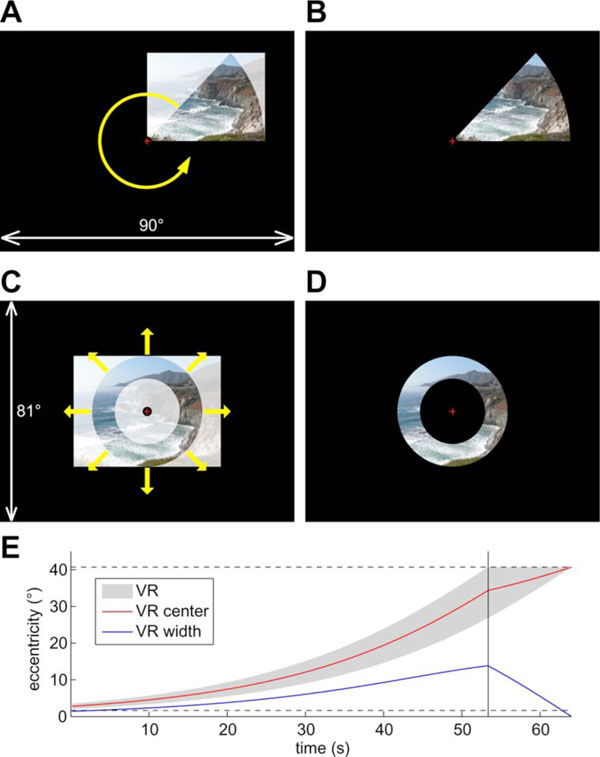

Twenty-four subjects (11 males, 13 females) participated in six 512-s scans while they watched wide-field, natural scene videos [24-27] (episodes of the television series Xena: Warrior Princess, Universal Studios, CA, USA) masked in a rotating wedge (45° angle) or an expanding (or contracting) ring (Fig. 2). The paradigms and stimuli were similar to those used in previous retinotopic mapping studies [28] except that the flickering checkerboard patterns were replaced with live videos in this study. The rationales for using wide-field moving natural scene stimuli as opposed to checkerboards are: (1) natural stimuli are more likely to attract attention, (2) their complex content more effectively activates both low- and high-level visual areas, and (3) television cameras fixate, pan, and track movements in a manner that closely approximates egocentric views during real-life navigation. Real-time stimuli were generated on an SGI O2 workstation (Silicon Graphics Inc., Fremont, CA) with an analog video input card. In-house OpenGL programs (by M.I.S.) using SGI’s C++ VideoKit took live-feed 60-Hz NTSC (National Television System Committee) input frames from a VHS tape player to generate the masked videos. In four polar-angle scans, the wedge rotated around a central fixation cross at a 64-s period (8 cycles/scan), with two scans in the counterclockwise direction and two in the clockwise direction. In one of two eccentricity scans, the ring expanded exponentially from the parafoveal region (~2°) to the far periphery (40.7°) in each 64-s period, and it contracted in the opposite direction in the other scan (Fig. 2E). Subjects listened (through ear plugs) to continuous soundtracks of the video via MR-compatible headphones (Resonance Technology, Northridge, CA) and they were required to fixate at a central cross, attend to the masked videos, and attempt to follow the story during each scan.

Fig. (2). Wide-field natural scene stimuli. (A) Creating stimuli for polar angle mapping. A frame is scaled to and masked by a rotating wedge. The picture (by R.-S.H.) is replaced by continuous video during fMRI experiments. (B) Example of resulting polar-angle stimuli. (C) Creating stimuli for eccentricity mapping. A frame is scaled to the outer perimeter and masked by an expanding ring. (D) Example of resulting eccentricity stimuli. (E) Time course of visible range (VR) of the expanding ring. Horizontal dashed lines indicate the extent of stimuli (~2°–40.7°). The vertical line indicates the time when the ring reaches the maximum eccentricity and starts to disappear.

Subjects lay supine over a thin layer of padding on the scanner bed with their heads tilted forward (~30°) by foam pads so that they could directly view the stimuli on a screen without a mirror. Additional foam padding was inserted into the head coil to minimize head movements. A semicircular back-projection screen (by R.-S.H.) was designed to take up the entire space between the ceiling of the scanner bore and the subject’s chest. Subjects were instructed to attach the screen to the ceiling with Velcro after they were placed at the center of the scanner. Subjects adjusted the screen to a distance of ~12.5 cm (measured by a tape ruler) in front of their eyes so that they could comfortably fixate and focus on the central fixation cross without blurring or double vision. Stimuli were projected from the console room through a wave guide onto a ~25×21 cm² screen using an XGA (1024×768 pixels) video projector (NEC, Japan) whose standard lens had been replaced with a 7.38–12.3 foot focal length XtraBright zoom lens (Buhl Optical, Pittsburgh, PA). The direct-view setup resulted in a field-of-view (FOV) of ~90×81.4° (~45° horizontal×40.7° vertical eccentricity).
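As a rough check on the viewing geometry, the field of view can be estimated from the approximate screen size and viewing distance. The sketch below assumes a flat screen centered on the line of sight, which is a simplification; the helper name `full_angle_deg` is hypothetical.

```python
import math

def full_angle_deg(extent_cm, distance_cm):
    """Full visual angle subtended by a flat, centered extent (assumption)."""
    return 2 * math.degrees(math.atan((extent_cm / 2) / distance_cm))

# Approximate dimensions quoted in the text (~25 x 21 cm at ~12.5 cm).
width_deg = full_angle_deg(25.0, 12.5)    # about 90 degrees
height_deg = full_angle_deg(21.0, 12.5)   # about 80 degrees
```

With these rounded inputs the horizontal extent comes out at about 90° and the vertical extent at about 80°, close to the reported ~90×81.4°. The small vertical difference is within what the approximate (~) dimensions allow.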

Image Acquisition

Subjects were scanned with 8-channel head coils in 3-T GE MRI scanners in the Center for Functional MRI at UCSD. Functional images were acquired in multiple 256-s or 512-s scans with the following parameters: single-shot echo-planar images; flip angle = 90°; TE = 30 ms; TR = 2000 ms; FOV = 20 cm; voxel size = 3.125×3.125 mm² in-plane; slice thickness = 3.5–4.0 mm; number of axial slices = 31; matrix = 64×64; 128 or 256 repetitions (TR) per scan (after discarding four dummy TRs). An alignment scan (FSPGR; FOV = 25.6 cm; voxel size = 1×1×1.3 mm³; matrix = 256×256; 106 axial slices) was acquired with the same block center and orientation as the functional images. In a different session, two sets of structural images (FSPGR; FOV = 25.6 cm; voxel size = 1×1×1 mm³; matrix = 256×256; 160–170 axial slices) were acquired and averaged to make cortical surface reconstructions using FreeSurfer [29, 30].

Data Analysis

For each session, functional images were motion-corrected and registered to a single functional reference volume using the 3dvolreg tool of the AFNI package (available at http://afni.nimh.nih.gov/afni). Functional images were then registered with each subject’s cortical surfaces using a transformation matrix initially obtained by registering the alignment scan to the surface-reconstruction scans, and then manually adjusting it for an exact functional/structural overlay (especially critical for surface-based methods). Voxel-wise time averages were obtained across four scans of the imagined navigation experiment and across scans with stimuli moving in the same direction for the retinotopic mapping experiment (after time-reversing clockwise-rotation and contracting-ring scans). Standard ‘phase-encoded’ analysis methods were applied to retinotopic mapping data [26-28]. A Fourier spectrum was computed for each voxel after removing the linear trends of its time course. A first-level F-ratio statistic was computed by comparing the power at the stimulus frequency (8 cycles/scan) to the average power at the remaining frequencies (excluding the ultra-low frequencies and harmonics) in the Fourier spectrum. A first-level p-value was then estimated by considering the degrees of freedom (df) of signal (df = 2) and noise (df = 102 for 128 TRs; df = 230 for 256 TRs) in each F-ratio.
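The first-level statistic described above can be sketched for a single voxel as follows. This is a minimal illustration, not the authors' code. The exact set of excluded frequencies is not given in the text; the choice in `noise_bins` (bins 1–3, plus the stimulus frequency and its second and third harmonics, each with its two neighboring bins) is an assumption, picked only because it reproduces the stated noise df of 102 and 230. The p-value uses the closed-form tail of the F distribution for a numerator df of 2.

```python
import cmath
import math

def detrend(ts):
    """Remove the least-squares linear trend (and mean) from a time series."""
    n = len(ts)
    t_mean, x_mean = (n - 1) / 2, sum(ts) / n
    slope = (sum((t - t_mean) * (x - x_mean) for t, x in enumerate(ts))
             / sum((t - t_mean) ** 2 for t in range(n)))
    return [x - x_mean - slope * (t - t_mean) for t, x in enumerate(ts)]

def dft_coef(ts, k):
    """Discrete Fourier coefficient at k cycles per scan."""
    n = len(ts)
    return sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(ts))

def noise_bins(n_tr, stim=8):
    """Frequencies treated as noise (ASSUMED exclusion set, see lead-in)."""
    excluded = {1, 2, 3}
    for h in (1, 2, 3):
        excluded.update({h * stim - 1, h * stim, h * stim + 1})
    return [k for k in range(1, n_tr // 2) if k not in excluded]

def f_ratio(ts, stim=8):
    """Return (F, phase of the stimulus-frequency coefficient, noise df)."""
    ts = detrend(ts)
    coef = dft_coef(ts, stim)
    bins = noise_bins(len(ts), stim)
    noise = sum(abs(dft_coef(ts, k)) ** 2 for k in bins) / len(bins)
    return abs(coef) ** 2 / noise, cmath.phase(coef), 2 * len(bins)

def p_from_f(f, df_noise):
    """Upper-tail p-value of F(2, df_noise); exact for numerator df = 2."""
    return (1 + 2 * f / df_noise) ** (-df_noise / 2)
```

For example, `p_from_f(4.82, 102)` is just under 0.01 and `p_from_f(3.04, 230)` is just under 0.05, in line with the thresholds quoted in the figure captions. With this sign convention, a response `cos(2πkt/n − φ)` yields a coefficient phase of −φ.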

To obtain cross-subject average activation maps, individual subject surfaces were first morphed into alignment with an icosahedral target [30]. This generates a higher resolution cross-subject alignment for the cortex than the conventional 3-D Talairach averaging methods do. The morphed surface was then used to sample individual surface-based statistics into a common spherical coordinate system using the mri_surf2surf tool of FreeSurfer. At each vertex, complex-valued data was vector-averaged across subjects. Each vector-averaged complex number was then back-sampled onto the corresponding vertices of a representative cortical surface using FreeSurfer (Figs. 3, 4) [31-35]. A two-tailed t-test was used to assess if the group average amplitude was significantly different from zero (no signal). Finally, surface-based cluster exclusion methods were used to correct for multiple comparisons of statistics on the average sphere [32-34].
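Vector averaging at a single vertex can be illustrated as below. The text does not spell out how the t-test was applied to complex-valued data; projecting each subject's value onto the group-mean phase direction before testing is one plausible reading and is an assumption here, as are the function names.

```python
import cmath
import math

def vector_average(values):
    """Complex (vector) mean across subjects at one vertex."""
    return sum(values) / len(values)

def projected_t(values):
    """One-sample t statistic of amplitudes projected onto the mean phase.

    ASSUMPTION: this projection is an illustrative choice, not a
    procedure confirmed by the text.
    """
    mean = vector_average(values)
    # Rotate so that the group-mean phase lies on the positive real axis.
    rotation = cmath.exp(-1j * cmath.phase(mean))
    proj = [(v * rotation).real for v in values]
    n = len(proj)
    m = sum(proj) / n
    var = sum((p - m) ** 2 for p in proj) / (n - 1)
    if var == 0:
        return math.inf if m else 0.0
    return m / math.sqrt(var / n)
```

Subjects with consistent phase reinforce each other in the average, while subjects with scattered phases cancel, which is why vector averaging penalizes vertices whose preferred visual-field position is inconsistent across subjects.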

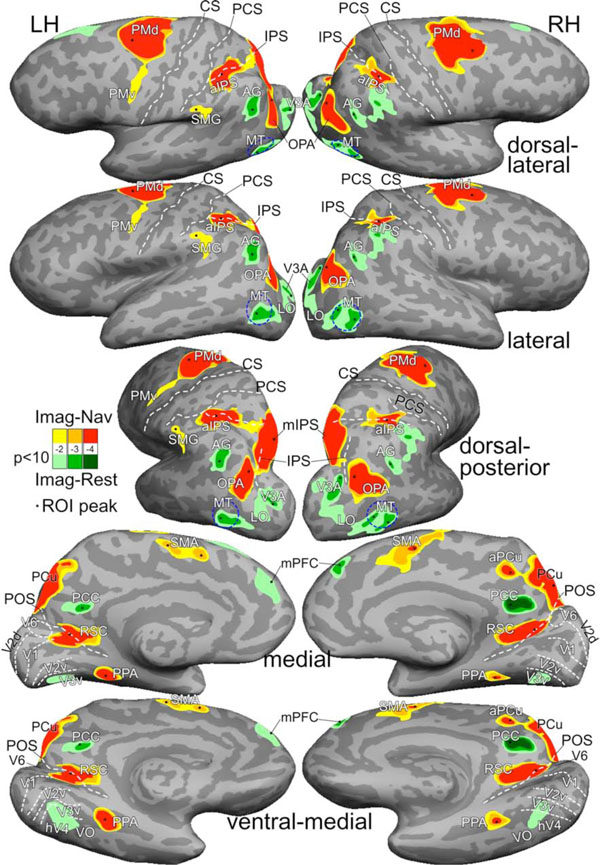

Fig. (3). Average activation maps of imagined navigation (Imag-Nav) and imagined rest (Imag-Rest). Activations were rendered with three statistical levels at F(2,102) = 4.8, p<0.01; F(2,102) = 7.4, p<0.001; and F(2,102) = 10.1, p<0.0001; all masked by t(9) = 2.26, p<0.05, cluster corrected. See Table 1 for Talairach coordinates of ROI peaks. Abbreviations: LH/RH, left/right hemispheres; CS, central sulcus; PCS, postcentral sulcus; IPS, intraparietal sulcus; POS, parieto-occipital sulcus; PMd/PMv, dorsal/ventral premotor; SMG, supramarginal gyrus; aPCu, anterior precuneus. Other abbreviations as in text.

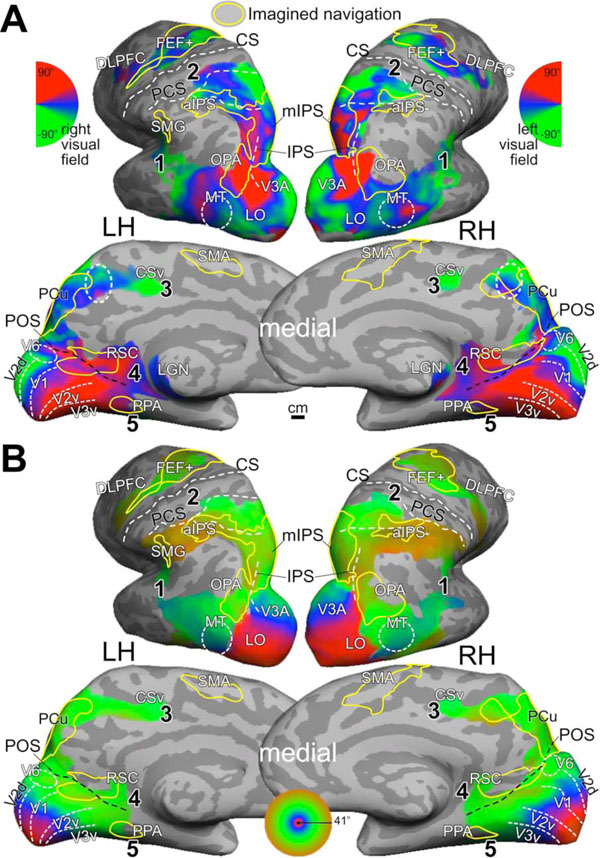

Fig. (4). Mental navigation network overlaps with retinotopic maps. (A) Average polar-angle map. The dashed circle in anterior precuneus indicates a complete retinotopic map. (B) Average eccentricity map. Both maps were rendered at F(2,230) = 3.04, p<0.05 and masked by t(23) = 2.07, p<0.05, cluster corrected. Yellow contours indicate regions activated during imagined navigation in Fig. (3) (F(2,102) = 4.82, p<0.01; masked by t(9) = 2.26, p<0.05, cluster corrected). The numbers in bold type indicate visual streams mentioned in text.

Retinotopic Organization in Selected sROIs

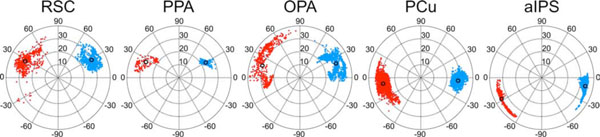

Five surface-based regions of interest (sROIs) including RSC, PPA, OPA, precuneus (PCu), and anterior intraparietal sulcus (aIPS) were selected from the average activation map of the imagined navigation experiment (Fig. 3). In each sROI, the percentage of vertices showing significant activations (p<0.05, cluster corrected) on both polar-angle and eccentricity group-average maps was measured on the cortical surface (Fig. 4). The visual field representation [14] at each vertex was estimated by combining the corresponding complex numbers from polar-angle and eccentricity maps into polar coordinates for the estimated local receptive field center (Fig. 5). The center (mean) and standard deviation of each cluster of contralateral hemifield representation on the polar plot were then estimated for each hemisphere.
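The combination of the two phase maps into a visual-field position can be sketched as follows. Several details are assumptions rather than facts from the text: that response phase (after delay correction) maps log-linearly onto eccentricity between ~2° and 40.7°, consistent with an exponentially expanding ring; that polar angle is expressed as elevation relative to the horizontal meridian of the contralateral hemifield; and the helper names themselves.

```python
import math
import statistics

ECC_MIN, ECC_MAX = 2.0, 40.7   # stimulus extent in degrees (from the text)

def eccentricity_deg(ecc_phase):
    """Map eccentricity-scan phase (radians) to degrees, log-linearly.

    ASSUMPTION: the exact phase-to-eccentricity mapping is not given.
    """
    frac = (ecc_phase % (2 * math.pi)) / (2 * math.pi)
    return ECC_MIN * (ECC_MAX / ECC_MIN) ** frac

def elevation_deg(polar_phase):
    """Angle above (+) or below (-) the horizontal meridian, in degrees.

    polar_phase is measured counterclockwise from the right horizontal
    meridian; both hemifields fold onto the range -90..90 (assumption).
    """
    return math.degrees(math.asin(math.sin(polar_phase)))

def cluster_summary(polar_phases, ecc_phases):
    """Mean and s.d. of elevation and eccentricity over an sROI's vertices."""
    angles = [elevation_deg(p) for p in polar_phases]
    eccs = [eccentricity_deg(e) for e in ecc_phases]
    return ((statistics.mean(angles), statistics.stdev(angles)),
            (statistics.mean(eccs), statistics.stdev(eccs)))
```

Under this convention, a positive mean polar angle indicates an upper-field bias and a negative value a lower-field bias.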

Fig. (5). Retinotopic organization in mental navigation network. Each polar plot shows the distribution of combined polar-angle and eccentricity representations in each sROI in Fig. (3) (for quantitative measures, see Tables 1, 2). Red/blue dots, right/left hemispheres; Black circles, cluster centers.

RESULTS

Imagined Navigation and Rest

Imagined navigation between familiar locations in a building activated a network of cortical regions bilaterally on the group average map (N = 10; Fig. 3, Table 1), including the dorsal premotor cortex (PMd), supplementary motor area (SMA), anterior intraparietal sulcus (aIPS), medial intraparietal sulcus (mIPS) extending into the precuneus (PCu), OPA, posterior and anterior banks of parieto-occipital sulcus (POS) into RSC, and PPA in the collateral sulcus. These brain regions are largely consistent with the results in other neuroimaging studies of mental navigation on real-world routes [6, 21-23].

Table 1. Statistical Significance and Talairach Coordinates of ROI Peaks in Imagined Navigation Experiment

| sROI | LH (x, y, z) | LH vertices | LH F(2,102) | LH p | RH (x, y, z) | RH vertices | RH F(2,102) | RH p |
|---|---|---|---|---|---|---|---|---|
| mIPS/PCu | (-12, -83, 49) | 3484 | 17.6 | 2.7 × 10⁻⁷ | (16, -74, 47) | 3419 | 14.9 | 2.2 × 10⁻⁶ |
| PMd | (-19, 1, 44) | 2845 | 16.1 | 8.6 × 10⁻⁷ | (34, 4, 48) | 3184 | 13.9 | 4.4 × 10⁻⁶ |
| OPA | (-32, -87, 28) | 1297 | 14.9 | 2.1 × 10⁻⁶ | (39, -83, 26) | 1880 | 13.3 | 7.1 × 10⁻⁶ |
| aIPS | (-28, -46, 36) | 1627 | 13.5 | 6.1 × 10⁻⁶ | (41, -42, 35) | 1265 | 8.6 | 3.6 × 10⁻⁴ |
| PPA | (-32, -43, -5) | 537 | 11.3 | 3.8 × 10⁻⁵ | (37, -42, -9) | 360 | 7.3 | 1.1 × 10⁻³ |
| RSC | (-19, -55, 14) | 1303 | 10.3 | 8.2 × 10⁻⁵ | (21, -57, 20) | 1037 | 16.7 | 5.3 × 10⁻⁷ |
| aPCu | | | | | (7, -45, 45) | 283 | 8.4 | 4.1 × 10⁻⁴ |
| SMA | (-6, 0, 51) | 548 | 6.8 | 1.7 × 10⁻³ | (7, 4, 48) | 1108 | 7.2 | 1.2 × 10⁻³ |
| SMA* | (-6, 16, 41) | | | | | | | |
| SMG | (-61, -31, 44) | 581 | 6.0 | 3.4 × 10⁻³ | | | | |
| AG | (-34, -60, 34) | 571 | 6.9 | 1.5 × 10⁻³ | (47, -56, 34) | 385 | 6.1 | 3.2 × 10⁻³ |
| MT | (-45, -79, 1) | 825 | 6.5 | 2.1 × 10⁻³ | (50, -69, -6) | 1244 | 6.6 | 2.0 × 10⁻³ |
| MT* | | | | | (48, -77, 1) | | | |
| PCC | (-6, -53, 26) | 294 | 6.2 | 2.8 × 10⁻³ | (8, -51, 25) | 593 | 9.2 | 2.1 × 10⁻⁴ |
| V3A | (-28, -96, 19) | 808 | 6.0 | 3.3 × 10⁻³ | (32, -84, 21) | 1221 | 6.0 | 3.3 × 10⁻³ |
| mPFC | (-4, 60, 19) | 577 | 5.6 | 5.1 × 10⁻³ | (5, 50, 36) | 417 | 7.2 | 1.2 × 10⁻³ |

Note: The F-statistic values were averaged across vertices within the contour of p<0.01 in each sROI. The first and second sections indicate sROIs with activation and deactivation, respectively.

* indicates the second peak in some sROIs. Abbreviations as in Fig. 3 and text.

Several cortical regions were activated while the subject imagined resting at target locations (green regions in Fig. 3). An equivalent interpretation is that they were ‘deactivated’ by imagined navigation. These regions include the posterior cingulate cortex (PCC), angular gyrus (AG), and medial prefrontal cortex (mPFC), which overlap with a subset of the default mode network [25, 36]. Additional regions activated during imagined rest (or equivalently, deactivated by imagined navigation) include areas V3A, lateral occipital (LO) cortex, middle temporal (MT) area, and an area overlapping with V3v, hV4, and ventral occipital (VO) area on the ventral surface.

Five Visual Streams

Group-average retinotopic maps (N = 24) of polar-angle and eccentricity representations are rendered on the same subject’s cortical surfaces as in Fig. (3). Fig. (4) shows that retinotopic maps branch out anteriorly from the occipital pole into five visual streams: (1) one extends through area MT into the superior temporal sulcus and reaches the posterior lateral sulcus, (2) one stretches along the intraparietal sulcus and arrives at the superior part of the postcentral sulcus near VIP+ [26], (3) one spreads across the POS into the medial posterior parietal cortex and precuneus, ending at the cingulate sulcus visual (CSv) area [37], (4) one runs across the POS into RSC at the isthmus of cingulate gyrus (iCG) and continues to the edge of cortex just under the splenium of the corpus callosum (the discontinuous patch of retinotopic activation in the thalamus includes the pulvinar and the lateral geniculate nucleus [LGN] [38]), and finally, (5) one follows the collateral sulcus and fusiform gyrus into the ventral occipitotemporal lobe. A disconnected set of retinotopic maps, including the frontal eye fields (FEF+) and dorsal lateral prefrontal cortex (DLPFC) [32, 34, 39], is found in the frontal cortex. By overlaying contours of brain regions activated by imagined navigation (Fig. 3) on retinotopic maps (Fig. 4), it is obvious that the mental navigation network largely overlaps with higher-level retinotopic areas.

Retinotopic Organization in Mental Navigation Network sROIs

Fig. (5) shows distributions of visual hemifield representations in five selected surface-based ROIs defined in the imagined navigation experiment. Scene-selective regions including RSC, PPA, and OPA show varying degrees of overlap with retinotopic areas that have a common emphasis on the far periphery of the upper visual field (Table 2). Two other higher-level sROIs, aIPS and PCu, overlap with retinotopic areas predominantly representing the horizontal to lower visual field of the far periphery. Finally, the mental navigation network overlaps with multiple retinotopic areas along the medial/posterior intraparietal sulcus and in frontal eye fields that are known to play important roles in visual spatial attention [32, 34, 35, 39]. This last set of areas contains representations of most of the visual field.

Table 2. Retinotopic Organization in Selected sROIs of the Mental Navigation Network.

| sROI | LH vertices | LH % | LH polar angle (mean ± s.d., °) | LH eccentricity (mean ± s.d., °) | RH vertices | RH % | RH polar angle (mean ± s.d., °) | RH eccentricity (mean ± s.d., °) |
|---|---|---|---|---|---|---|---|---|
| RSC | 1303 | 70.5 | 27.8 ± 9.4 | 29.4 ± 3.4 | 1037 | 47.7 | 27.0 ± 16.8 | 28.0 ± 3.8 |
| PPA | 537 | 48.8 | 29.6 ± 5.1 | 23.6 ± 1.9 | 360 | 35.6 | 25.6 ± 9.9 | 28.1 ± 4.7 |
| OPA | 1297 | 83.8 | 21.6 ± 14.0 | 29.8 ± 4.3 | 1880 | 51.9 | 18.0 ± 25.2 | 30.2 ± 4.8 |
| PCu | 1420 | 89.4 | -4.7 ± 7.1 | 28.7 ± 2.9 | 1823 | 97.1 | -9.1 ± 13.3 | 29.3 ± 2.8 |
| aIPS | 1627 | 37.5 | -11.1 ± 9.6 | 34.0 ± 1.6 | 1265 | 34.4 | -28.1 ± 11.1 | 34.9 ± 0.9 |

Note: For each sROI, the percentage indicates vertices activated on both polar-angle and eccentricity maps. For the purpose of these measurements, PCu refers only to the posterior precuneus region (yellow contour) visible on the medial wall in Fig. 4. s.d., standard deviation.

DISCUSSION

For many years, it was assumed that retinotopic organization is limited to the lower-level visual cortex, and that regions involved in higher-level cognitive functions are somehow more diffuse or not topographically organized. Recent neuroimaging studies have begun to reveal retinotopic maps in the frontal, posterior parietal, middle temporal, and ventral occipitotemporal cortex in humans [14, 32, 34, 35, 39-41]. However, it has been unclear whether retinotopic organization extends into medial brain regions that play important roles in higher-level visual spatial processing including mental imagery, episodic memory, scene processing, and spatial navigation [1-8, 11, 12]. Furthermore, retinotopic organization is a bottom-up, sensory-driven principle that requires that external stimuli be presented at a specific location in the visual field in order to activate the corresponding patch on the cortical surface. In contrast, mental navigation is a top-down, cognitive-driven process that is not activated by external sensory stimulation. Interestingly, our results show that top-down and bottom-up processes coexist in higher-order brain regions, notably in RSC. The finding of retinotopic (eye-centered) organization in RSC is consistent with its functions in translating visual information between egocentric (viewer-centered) and allocentric (world-centered) reference frames [4, 5].

Results from previous studies have suggested that other scene-selective regions PPA and OPA are located within or adjacent to retinotopic areas [13-15]. In this study, we showed that RSC, PPA and OPA have a common emphasis on the far periphery of the upper visual field (Fig. 5). The bias toward the upper peripheral visual field in PPA and OPA (TOS) is consistent with findings in previous studies [14, 15, 42-44]. However, the current study differs from others in two ways. First, our results show RSC, PPA and OPA overlap with even more peripheral representations (~20–30°). Second, RSC, PPA and OPA were defined here by a mental navigation task without any external stimuli (e.g., pictures of scenes). This may explain why the PPA defined in this study only partially overlaps retinotopic areas (49% and 36% in the left and right hemispheres, respectively) at a location near PHC-2 (anterior part of PPA) activated by real scenes in a previous study [14]. Similarly, RSC and OPA defined in this study turned out to be mostly retinotopic. In fact, retinotopy extends beyond the navigation-defined sROI of RSC (Fig. 4) into the isthmus of cingulate gyrus and reaches the edge of cortex just under the splenium of the corpus callosum. These results suggest that the bias toward upper, peripheral visual field representations in scene-selective regions may facilitate encoding of distant, above-horizon scenes (i.e., upper visual field) as well as immediate, surrounding structures (i.e., peripheral vision) during self-motion in large-scale environments. The concentration of retinotopy near the horizontal meridian in PCu (Fig. 5) may reflect the involvement of this area with landmarks near the horizon. Further studies are required to investigate subdivisions (retinotopic and non-retinotopic) as well as the possible functional implications of the biased visual field representations we have found in these regions.

We found that intermediate retinotopic visual areas like V3A, LO, MT, V3v, and hV4 were actually inhibited during the active phase of the imagined navigation task. Since it is likely that higher level retinotopic visual areas in superior and medial parietal cortex as well as PPA, RSC, and OPA receive input from these intermediate areas (based on non-human primate data), it appears that the brain actively suppresses them in the process of generating intrinsic activity in higher level areas, perhaps to avoid interference from bottom-up noise in areas normally strongly modulated by real external stimuli.

The visual system has traditionally been divided into two streams [45, 46]. The dorsal stream that processes information of object location and motion is also known as the ‘where’ or ‘how’ pathway. The ventral stream that plays an important role in pattern recognition (e.g., objects, faces, and scenes) is often labeled as the ‘what’ pathway. Alternative models hypothesize that each stream might be further subdivided functionally and anatomically [4, 47]. Here, we propose a five-stream hypothesis for the human visual system. As shown in our retinotopic maps, the dorsal stream can be further divided into three sub-streams. The middle temporal stream (#1 in Fig. 4) that extends into the superior temporal sulcus and then posterior lateral sulcus is specialized in local, structural, and biological motion. The superior parietal stream (#2 in Fig. 4) that extends into the anterior intraparietal sulcus and postcentral sulcus is important for encoding object locations in multiple reference frames and for visually guided actions in peripersonal space. These two streams are separated by the angular gyrus, a part of the default-mode network, in humans. The medial parietal stream (#3 in Fig. 4) that extends into the superior precuneus ending at the cingulate sulcus is involved in top-down mental imagery and contains bottom-up retinotopic maps (including a complete map of the contralateral hemifield as indicated by dashed circles on the medial wall in Fig. 4). The ventral stream can be further divided into two sub-streams. The retrosplenial stream (#4 in Fig. 4) that extends into the isthmus of cingulate gyrus plays important roles in spatial navigation and peripheral vision. This stream is separated from the medial parietal (superior precuneus) stream by PCC, another part of the default-mode network. The ventral occipitotemporal stream (#5 in Fig. 4) that extends into the fusiform gyrus is known to respond to objects, faces, and large-scale scenes. Among these five streams, the medial streams (#3 and #4 in Fig. 4) are less clear and future studies are required to investigate detailed retinotopic and functional organization in these regions.

CONCLUSION

We show that retinotopic organization branches into five visual streams distributed across the caudal half of the cortical surface. These streams extend into higher-order brain regions that are involved in cognitive functions including mental navigation. This suggests that retinotopy persists as the fundamental organizing principle throughout most of the broadly-defined visual cortex in humans.

CONFLICT OF INTEREST

None declared.

ACKNOWLEDGEMENTS

This study was supported by a grant (R01 MH081990) from the National Institute of Mental Health, USA, and a Royal Society Wolfson Research Merit Award, UK (M.I.S.). We thank Donald J. Hagler Jr. for developing surface-based group averaging methods, Ying C. Wu for recording verbal instructions, and Katie L. Holstein for help with the manuscript and illustrations.