Simulating Motion in Figurative Language Comprehension

Abstract

In this visual world eye tracking study we explored the simulation of fictive motion during the comprehension of figurative sentences in Hindi. Eye movement measures suggest that language comprehenders gaze longer at visual scenes on hearing fictive motion sentences than on hearing their literal counterparts. The results support previous findings in English and provide cross-linguistic evidence for the simulation and embodied views of language processing. We discuss the findings in the light of neuroimaging models and language-vision interaction.

INTRODUCTION

Recent embodied and simulation-based approaches to language comprehension predict that comprehending language triggers mental simulation of the events and objects described [1-4]. According to theories of embodied cognition [5], such simulation depends on mental representations formed during comprehenders’ actual perceptual experience and interaction with the environment. During sentence processing these representations become active, and their activation can be observed in motor behavior such as hand or eye movements. These movements are largely unconscious and involuntary. Measuring them reveals the moment-by-moment nature of language processing and the underlying cognitive mechanisms [6]. In this article we consider the processing of fictive motion sentences, whose comprehension involves mental simulation that changes eye movement behavior, and we discuss the findings in the light of behavioral as well as neural models of language comprehension, with particular emphasis on vision-language interaction.

Alongside literal description, figurative language is pervasive in all cultures and forms part of everyday discourse. Meaning in figurative language can be much more complex than the straightforward meaning of literal sentences. Metaphors and idioms, for example, have been widely studied in this respect [7]. Figurative language use often consists of assigning actions to agents that are not part of real-world experience [8]. Consider, for example, a metaphorical sentence with an agent incapable of any motion: “a sense of incapacity ran through his spine”. In contrast, there are sentences in which the agents are not abstract but are still not capable of any motion themselves. Such agents can be paths, both travelable and non-travelable, and when these agents are used as noun phrases along with action verbs, comprehenders simulate illusory motion [9]. This article studies motion simulation during the comprehension of simple declarative sentences that are not metaphorical but figurative and contain fictive motion.

Motion verbs, which are pervasive in languages around the world, are used in both literal and non-literal senses with different types of noun phrases. Actual motion verbs such as go and run, when used literally, depict the physical displacement or implicit state change of an entity, as in the following sentences: the boy is running towards the valley and the bus goes along the coast [9]. Such literal usage of motion verbs implies a change of state in physical space, the passage of time and a path travelled. However, the non-literal use of motion verbs does not entail any physical change of state, e.g. The road goes through the desert [10]. In this case, a perception of illusory motion is associated with the agent, leading to simulation [11]. The use of motion verbs in a non-literal context gives the ‘feel’ or ‘essence’ of actual motion. Such an implicit type of motion is called fictive motion [12].

Fictive motion is therefore an imagined type of motion, which has received different labels such as abstract motion [13] and subjective motion [14]. Construal of fictive motion expressions leads to the building of a spatial mental representation of the specific scene described, in which the comprehender mentally scans and enacts motion [10]. Construal of both fictive motion sentences and non-fictive or literal sentences leads to the construction of a spatial mental model [15], but the difference is that fictive motion sentences evoke simulation of motion along the spatial map whereas their literal counterparts do not. It is therefore the fictive motion verb that adds temporal and dynamic dimensions to the static representation [16]. Fictive motion also affects the comprehension of abstract domains such as the understanding of time and temporal reasoning. Most importantly for our purpose, such simulation affects cognitive behavior, including eye movement patterns and the deployment of visual attention.

A previous study of motion simulation in fictive motion sentences in English [15, 17] found that subjects spent more time looking at named objects while they listened to the fictive motion versions of sentences than while they listened to the non-fictive versions. In this eye tracking study, objects were depicted in both horizontal and vertical orientations. The most crucial finding, however, was that gaze durations were significantly longer in the fictive motion condition than in the non-fictive motion condition. This result was interpreted as language comprehension affecting motion simulation. In a recent study involving sentence reading, following learning of different bodily actions, it was observed that reading speed was enhanced when the action and the descriptions were congruent, suggesting a direct causal relation between language and action [18]. However, these studies have not shed light on the exact cause of motion simulation or on which aspect of a sentence induces it. Apart from behavioral studies, there is evidence from the brain imaging literature that different cortical areas respond selectively to motion simulation. It must be noted that, in spite of many cross-linguistic classifications and categorizations of fictive motion phenomena in several languages, very few studies have explored its psychological processing.

The brain imaging literature suggests that specific cortical areas simulate such fictive motion during the comprehension of figurative language. An fMRI study on fictive motion [19] found significant activation in the left posterior middle temporal gyrus; this effect was attributed to the hearer, while listening to the fictive motion sentences, scanning the depicted scenario egocentrically while applying motion. Another fMRI study [20] compared motion in abstract vs. concrete sentences. Its results indicated strong bilateral posterior network activation, including the fusiform/parahippocampal regions, for sentence meaning involving motion in a concrete context. This evidence suggests that language use in humans is highly embodied, that simulation of all types of knowledge associated with language is biologically grounded, and that it leads to motor resonance. However, it must be noted that in spite of these brain imaging findings and behavioral demonstrations of motion simulation, a coherent account of figurative language processing is still lacking.

In sum, processing fictive motion sentences has been shown to cause simulation of motion in language comprehenders. This perception of illusory motion affects brain activation as well as other physiological measures such as eye movements. The present study used such fictive motion sentences to study the online interaction of language and vision in an eye tracking paradigm. Since motion simulation causes changes in eye movement behavior, it was predicted that subjects would look longer at, and allocate more visual attention to, scenes while processing fictive motion sentences than while processing literal ones. We further measured the time course of such simulation, as indicated by the consistent deployment of visual attention to the scene over a period of time.

In the present study we made use of the “visual world” eye tracking paradigm [21, 22]: we presented picture displays along with spoken sentences and measured eye movements. We predicted that if subjects simulate motion during the comprehension of fictive motion sentences, the effect would be visible in eye movement measures, i.e. longer gaze durations and a higher total number of fixations. Moreover, Hindi is typologically different from English, and it is therefore important to understand whether such simulation is language dependent or universal.

MATERIALS AND METHODS

Subjects

Forty participants (mean age 23.4 years) from the University of Allahabad took part in this eye tracking experiment. All were native Hindi speakers with normal or corrected-to-normal vision. Informed consent was obtained from each participant prior to the experiment. Subjects were naïve to the aim of the experiment.

Material

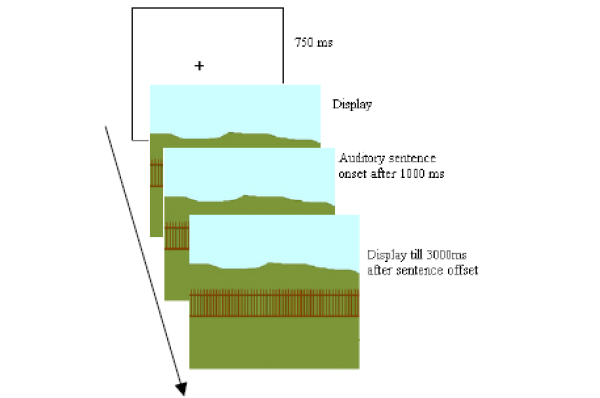

Thirty-two experimental sentences were used with 16 displays in this experiment. Each sentence had a literal (NFM) and a figurative (FM) version (Appendix 1). Effort was made to keep the sentence lengths similar. Displays had a resolution of 1024 x 768 pixels and filled the entire screen area of a 17 inch color monitor. Further, 16 filler pictures were added along with the experimental items. The pictures depicted spatial objects having a path that was either travelable (bridge, road) or non-travelable (fence, row of trees). We used an equal number of horizontal and vertical objects to avoid any visual bias. The displays closely matched real depictions and were rated for their suitability. Fig. (1) shows typical experimental stimuli with the fictive and non-fictive versions of the sentences used, along with the presentation sequence. For each picture, two auditory sentences were recorded: a fictive motion sentence and its literal, non-fictive motion counterpart.

Fig. (1). Time sequence for stimulus presentation along with the FM (Fictive Motion) and NFM (Non Fictive Motion) sentence types for that display.

Sentences were recorded in GoldWave by a female native speaker of Hindi using neutral intonation. The recordings were saved as mono WAV files sampled at 4.41 kHz. An additional 16 filler sentences were recorded for the filler pictures. An example of a filler sentence is ye ladka kafi lamba hai (‘This boy is very tall’). An example of a fictive motion sentence is yeh pul nadi ke upar se hokar guzarta hai (‘This bridge runs across the river’), and its non-fictive counterpart was yeh pul nadi ke upar hai (‘This bridge is over the river’). The same pictures and the same fillers were used in both conditions. The mean duration of the fictive motion sentences was 4.4 s (SD = 0.75) and that of the non-fictive motion sentences was 4.1 s (SD = 0.62). The difference in length was, however, not significant and was therefore not expected to affect the durations of eye movements.

Picture displays and the corresponding sentences (FM and NFM) were rated for compatibility by a separate group of participants (N = 15). We also collected ratings of the semantic equivalence of the FM and NFM sentences in the context of the picture. Ratings were made on a 5-point Likert scale on which “5” indicated “highly compatible/match” and “1” indicated “highly incompatible/mismatch”. The selected stimuli had mean ratings of 4.1 (SD = 0.41) and 4.3 (SD = 0.37) for the FM and NFM conditions respectively. Participants who took part in the ratings of pictures and sentences were not included in the main eye tracking study.

Apparatus

Participants were seated at a distance of 50 cm from a 17” color CRT monitor with a resolution of 1024 x 768 pixels and a screen refresh rate of 75 Hz. Participants’ eye movements were recorded with an SMI high-speed eye tracking system (SensoMotoric Instruments, Germany) at a sampling rate of 1250 Hz. Viewing was binocular, but only data from the right eye were used for analysis. The visual stimuli subtended approximately 15 degrees of visual angle. Participants were instructed to keep head movements and eye blinks to a minimum.
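The relation between viewing distance, stimulus size and visual angle can be illustrated with a short calculation. The sketch below assumes the standard formula, angle = 2·atan(size / (2·distance)); the function names are hypothetical and the script is not part of the original experimental software. It only shows what physical extent corresponds to roughly 15 degrees at the 50 cm viewing distance reported above.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by an object of a given size."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


def stimulus_size_cm(angle_deg: float, distance_cm: float) -> float:
    """Object size (cm) that subtends a given visual angle (inverse formula)."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


# Extent corresponding to 15 degrees at a viewing distance of 50 cm.
size = stimulus_size_cm(15.0, 50.0)
print(f"{size:.1f} cm")  # about 13.2 cm
```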

Procedure

The experiment began with an automatic calibration procedure in which participants looked at a fixation cross presented at 13 different locations on the monitor. The eye tracker accepted successfully calibrated points automatically. After successful calibration, each experimental trial began with a fixation cross at the centre of the screen for 750 ms, followed by presentation of the picture (Fig. 1). A spoken sentence was presented 1000 ms after picture onset. Sentences were presented via speakers located on both sides of the monitor and equidistant from the subject. The picture remained on the screen until 3000 ms after the offset of the spoken sentence. Participants were instructed (both verbally and in writing) to look at the pictures carefully while listening to the spoken sentences. To ensure that participants were looking and listening attentively, at the end of each trial they judged whether the picture shown and the spoken sentence were compatible. These responses were not used in any further analysis. Participants pressed the left arrow key if they ‘agreed’ and the right arrow key if they ‘disagreed’. The next trial began only after the participant had responded. In all, there were 32 trials in each condition along with 16 filler items in a between-subject design in which participants were randomly allocated to one or the other group. The order of presentation of stimuli was randomized for each participant. Each experimental session took about 12-15 minutes to complete.

Data Analysis

The dependent eye movement variables were the total number of fixations, the average duration of fixations, the number of entries and the total gaze duration. Fixations shorter than 100 ms were not included in the analysis. These variables were measured only within a pre-specified Area of Interest (AOI). Each picture had one AOI, which covered the entity named by the noun phrase (trajector) with which fictive motion was associated. For example, in the sentence the road runs through the forest, the AOI was the road. AOIs were drawn manually, enclosing the contours of the entity that the noun phrase referred to in the spoken sentence. Care was taken to keep the areas of the AOIs the same across stimuli without compromising their natural representation.
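The four measures can be sketched in code as follows. This is a minimal illustration and not the analysis software used in the study. It assumes a rectangular AOI (the AOIs described above followed object contours) and it counts entries from the fixation sequence, whereas entries as defined in this study may also occur without a fixation. The `Fixation` class and `aoi_measures` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

MIN_FIXATION_MS = 100  # fixations shorter than this are excluded, as in the text

# Assumption: a rectangular AOI given as (left, top, right, bottom) in pixels.
Rect = Tuple[float, float, float, float]


@dataclass
class Fixation:
    x: float
    y: float
    start_ms: float
    end_ms: float

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def in_aoi(fix: Fixation, aoi: Rect) -> bool:
    left, top, right, bottom = aoi
    return left <= fix.x <= right and top <= fix.y <= bottom


def aoi_measures(fixations: List[Fixation], aoi: Rect) -> Dict[str, float]:
    """Compute fixation count, mean duration, total gaze duration and entries."""
    valid = [f for f in fixations if f.duration_ms >= MIN_FIXATION_MS]
    inside = [f for f in valid if in_aoi(f, aoi)]
    total = sum(f.duration_ms for f in inside)

    # Simplification: an entry is a transition from outside to inside the AOI
    # between consecutive valid fixations.
    entries = 0
    was_inside = False
    for f in valid:
        now_inside = in_aoi(f, aoi)
        if now_inside and not was_inside:
            entries += 1
        was_inside = now_inside

    return {
        "n_fixations": len(inside),
        "mean_fixation_ms": total / len(inside) if inside else 0.0,
        "total_gaze_ms": total,
        "n_entries": entries,
    }


# Made-up fixations for one trial, for illustration only.
trial = [
    Fixation(100, 100, 0, 250),      # outside the AOI
    Fixation(500, 400, 300, 700),    # inside, 400 ms
    Fixation(520, 410, 720, 780),    # inside but only 60 ms: excluded
    Fixation(530, 420, 800, 1100),   # inside, 300 ms
    Fixation(900, 700, 1150, 1400),  # outside
    Fixation(510, 390, 1450, 1650),  # inside, 200 ms
]
print(aoi_measures(trial, (400, 300, 600, 500)))
```

For this made-up trial the sketch reports three fixations in the AOI with a mean duration of 300 ms, a total gaze duration of 900 ms and two entries.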

RESULTS

Eye Movement Measures

We analyzed all the dependent variables over the total duration of the picture display, i.e. from picture onset to offset, which lasted 9-10 seconds. Participants made significantly more fixations in the FM condition (M = 15.66, SD = 4.7) than in the NFM condition (M = 10.06, SD = 2.04), t(38) = 4.88, p < 0.001. The average fixation duration was significantly longer in the FM condition (M = 806.14 ms, SD = 226.45) than in the NFM condition (M = 532.35 ms, SD = 167.64), t(38) = 4.34, p < 0.001. We also measured the total number of entries, i.e. saccades into the AOI, for the two conditions. The number of entries is the number of times a participant’s gaze entered the AOI and then moved out, with or without fixating in that region. The eyes may move into a location without fixating, indicating anticipatory mechanisms. However, the difference between the FM (M = 4.99, SD = 1.14) and NFM (M = 5.05, SD = 0.97) conditions was not significant, t(38) = 0.18, p > 0.05. Total dwell or gaze duration is the sum of the durations of all fixations in the given AOI. Total gaze duration was significantly longer in the FM condition (M = 3619.15 ms, SD = 845.3) than in the NFM condition (M = 2591.07 ms, SD = 677.11), t(38) = 4.25, p < 0.001.
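The reported t values can be approximately recovered from the summary statistics alone. The sketch below computes an independent-samples t statistic with pooled variance. It assumes two groups of 20 participants each, which is consistent with the forty participants and the 38 degrees of freedom reported but is not stated explicitly. The function name `pooled_t` is hypothetical.

```python
import math
from typing import Tuple


def pooled_t(m1: float, sd1: float, n1: int,
             m2: float, sd2: float, n2: int) -> Tuple[float, int]:
    """Student's t for two independent groups, from means, SDs and group sizes."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    return (m1 - m2) / se, df


# Assumption: n = 20 per group (40 participants, between-subject design).
measures = {
    "number of fixations": (15.66, 4.7, 10.06, 2.04),
    "average fixation duration": (806.14, 226.45, 532.35, 167.64),
    "number of entries": (4.99, 1.14, 5.05, 0.97),
    "total gaze duration": (3619.15, 845.3, 2591.07, 677.11),
}
for name, (m_fm, sd_fm, m_nfm, sd_nfm) in measures.items():
    t, df = pooled_t(m_fm, sd_fm, 20, m_nfm, sd_nfm, 20)
    print(f"{name}: t({df}) = {t:.2f}")
```

Under this assumption the recomputed statistics agree with the reported values to within rounding. The sign for the number of entries is negative because the NFM mean is slightly higher.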

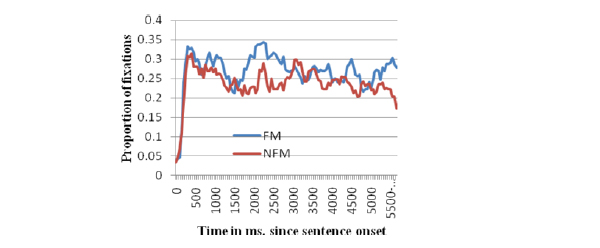

Time Course of Motion Simulation

We divided the entire duration of the acoustic sentence into 20 ms time windows and plotted the proportion of fixations to the AOI as a function of sentence type. The time course of fixations shows a continuous deployment of visual attention to the depicted area during the course of the auditory sentence. This suggests simulation of motion in the figurative context relative to the literal sentence (Fig. 2).
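The binning step can be sketched as follows. This is an illustrative reconstruction and not the original analysis code. It assumes that each trial is represented by the intervals (in ms from sentence onset, end exclusive) during which gaze was fixated on the AOI, and that a trial contributes to a window if any such interval overlaps it. The function name and the data layout are hypothetical.

```python
from typing import List, Tuple

Interval = Tuple[float, float]  # (start_ms, end_ms) of a fixation on the AOI


def fixation_proportions(trials: List[List[Interval]],
                         window_ms: int = 20,
                         total_ms: int = 6000) -> List[float]:
    """Proportion of trials with gaze on the AOI in each time window."""
    if not trials:
        raise ValueError("at least one trial is required")
    n_bins = total_ms // window_ms
    counts = [0] * n_bins
    for intervals in trials:
        hit = [False] * n_bins
        for start, end in intervals:
            first = max(0, int(start // window_ms))
            last = min(n_bins - 1, int((end - 1) // window_ms))
            for b in range(first, last + 1):
                hit[b] = True
        for b, was_hit in enumerate(hit):
            counts[b] += was_hit
    return [c / len(trials) for c in counts]


# Two made-up trials over a 100 ms span, for illustration only.
example = [[(0, 40)], [(20, 60)]]
print(fixation_proportions(example, window_ms=20, total_ms=100))
```

Computing one such curve per sentence type (FM and NFM) and plotting both against time would give a time-course plot of the kind described here.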

Fig. (2). Proportion of fixations to the AOI for the FM and NFM conditions as a function of time, from sentence onset to 6000 ms.

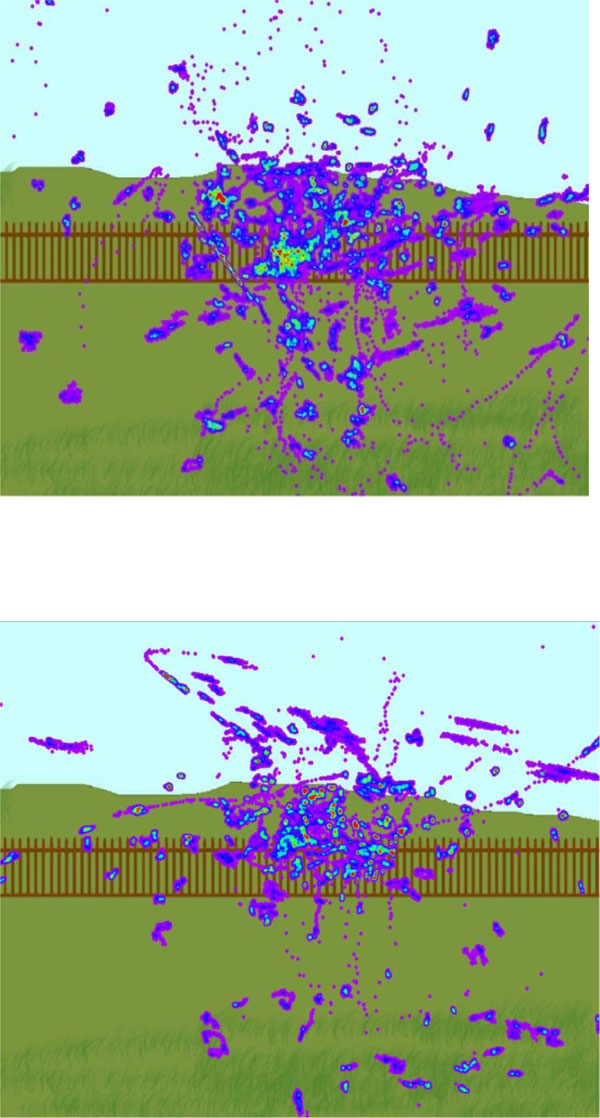

Dispersion of Visual Attention

Eye movement data provide direct measurement of overt visual attention. Eye movement scan paths show time bound shifts in visual attention during the course of cognitive processing. Fig. (3) shows dispersion of visual attention to the AOI for the fictive motion condition for an example stimulus for ten subjects (upper panel). The lower panel shows the same measurements for the non fictive sentence for that stimulus. It is fairly evident from the scan path that subjects scanned the entire path area when they listened to the fictive motion sentence and allocated more visual attention while it is not the case with the literal sentences. These visualizations of deployment of overt visual attention are valuable in understanding how subjects look at a picture and where they look most.

Fig. (3). Attention maps for fictive motion (upper panel) and non fictive motion sentences (lower panel) on an example stimulus.

DISCUSSION

In the current study, we explored the simulation of motion caused by fictive motion expressions in Hindi by studying eye movements during scene perception. The results are consistent with previous studies on fictive motion [15,17]. We found a significantly higher number of fixations, a higher average duration of fixations and higher total gaze durations for the fictive motion sentences than for their literal counterparts. Our results provide evidence for motion simulation triggered by fictive motion in Hindi and therefore add to the cross-linguistic generalizability of the findings.

The higher number of fixations in the fictive motion condition reflects the capture of attention and persistent visual inspection of the spatial entities and the paths depicted in the pictures, as induced by the fictive motion verbs. This is interesting because the same visual stimulus was used in the non-fictive motion condition but failed to increase the number of times participants fixated on the relevant AOIs. The only manipulation in this study was in the spoken sentence, which was either a fictive motion sentence or its literal counterpart, so the increased number of fixations and average gaze durations reflect the effect of the fictive motion sentences. We did not find any difference in the number of entries between the two conditions. This indicates that an almost equal number of saccades were launched into the AOIs in the two conditions irrespective of the nature of the sentence heard. This also supports the phenomenon of simulation of fictive motion: participants’ gaze entered and re-entered the relevant AOIs more or less the same number of times in both conditions, but participants fixated more only in the FM condition. Our results add further cross-linguistic evidence to the perceptual motor accounts of language comprehension [23] and also to the predictions of simulation-based theories of language processing. These results suggest that participants do mentally simulate motion for seemingly inanimate objects that are not capable of any motion themselves. Our study was similar in design to that of Richardson and Matlock [17] in the sense that the same display was used with both figurative and non-figurative sentences having the same meaning. This design offers strong evidence that the differences in number of fixations and average gaze durations are due to motion simulation and not to other factors.

Increased gaze durations further support perceptual motor representation accounts of language that consider language comprehension a dynamic act of semantic simulation. Such eye movements indicate the unconscious and automatic nature of motor resonance arising during the processing of spatial language and provide strong support for simulation-based approaches to language comprehension. This automatic activation of the perceptual motor system has a direct influence on eye movement behavior, the neural basis of which we discuss below.

Figurative language processing has attracted extensive neuroimaging investigation. Studies with patients [24,25] and normal subjects [26] have shown right hemisphere involvement. However, a careful look at the stimuli used in several such studies shows considerable variation in what is called figurative. For example, most studies have focused on the processing of metaphors [27], idioms [28] or proverbs [29] and have found several brain areas responsible for processing such non-literal language. It has been found that, in contrast to figurative sentences, action sentences activate motor areas. A recent fMRI study [30] examined the comprehension of action verbs and action sentences along with literal expressions. Its findings showed increased activity in motor areas when action verbs were presented in isolation compared to literal ones. When the same verbs were presented in idiomatic contexts, activation was found in fronto-temporal regions only. This shows the well-defined selective specialization of brain networks and their modulatory behavior in language processing. Our findings regarding the simulation of motion in fictive motion sentences may likewise arise from activation of brain areas that tap motion in inanimate objects while assigning temporary agenthood. However, current behavioral findings need to be integrated with neuroimaging findings for a coherent model to emerge of how different types of figurative language are processed in the brain.

However, very few studies have used fictive motion sentences in neuroimaging [20]. This has led to a difficulty in comparing simulation arising out of fictive motion sentences and their metaphorical counterparts. In an fMRI study of metaphor processing [31], significant involvement of left inferior frontal gyrus was found indicating semantic processing. This however blurs the traditional difference between syntactic and semantic processing. Therefore, the asymmetry between left and right hemisphere areas in processing of figurative language may indicate differential specializations of brain cortical areas for subtle variations in semantic structures of language. As far as fictive motion sentences are concerned, the primary interest lies in the abstract and illusory nature of motion that subjects simulate while comprehending such sentences. Therefore, future studies should aim to uncover the difference between processing a fictive motion sentence and a metaphorical sentence.

Another important point while dealing with language-vision interaction, as in the current study, is to account for representational issues that arise during processing. Processing of fictive motion sentences affects oculomotor behavior in an involuntary manner as is evident in the current results. The simulation of motion causes dynamic shifts of visual attention on a depicted area of the scene and we find increased gaze duration and increased number of fixations. In spite of several studies on the neurophysiological basis of saccade target selection [32] and saccade programming [33], so far very little is understood about the neuronal basis of language comprehension and visual attentional shifts during processing of scenes. The link that neuroimaging must find is between language processing and saccade target selection via attentional mechanisms. Eye movement data, as in this current study, demonstrate this precisely albeit taking a behavioral route. We argue that motion simulation in figurative language processing dynamically affects attentional mechanism that in turn leads to changes in saccadic target selection. Further studies with simultaneous eye tracking and fMRI may reveal this important network that sub-serves language-vision interaction and allows embodiment to take place during cognition in perceptual motor integration [34].

There are other important issues as well that need to be pointed out while we are viewing the results from a perspective of language and vision interaction. For example, what is the nature of interaction between language processing and scene perception in an embodied framework? And do grammatical aspects of the figurative language play any role in simulation of motion? It is possible that the noun phrase that occupies a grammatically focused position in a sentence may trigger deployment of visual attention compared to when it is not in such a position.

In the behavioral domain, recent works involving auditory sentences and scenes provide conflicting accounts of such interaction between language and visual attention during scene perception. The affordance based account [35] emphasizes the creation of a mental representation of the heard sentences that subsequently guides visual attention to those areas of a scene that afford it. However, in case of the fictive motion sentence, the meaning of it hardly affords what is in the scene i.e., a scene depicting a road through a desert does not afford its movement like an animate mobile entity. Therefore, it is very difficult to explain such simulation of motion causing shifts in visual attention using such an approach. Moreover, we know very little about the brain areas and their online interaction that allow such integration of linguistic and visual stimuli.

The other account recently proposed [36] considers involvement of memory in guiding eye movements to scenes as a function of a sentence. Recent modification of this account offers a brain perspective where important brain areas that otherwise process language like the left IFG show activations to online interaction of linguistic and visual information. Studies done on figurative language processing suggest a predominant role of right hemisphere because of the complexity in such sentences [37]. In sum, the literature from brain imaging till date does not offer a unanimous picture of the cortical activity associated with figurative language processing. Integrating neuroimaging processing models of figurative language with findings from visual cognition may reveal subtle interaction patterns between the linguistic and the non-linguistic as far as meaning generation is concerned.

The present findings pose several important questions for neuroimaging research on language processing. Current models of literal as well as figurative language processing, in terms of the brain areas involved, must be extended to include the online interaction of language and vision in order to account for phenomena such as motion simulation in sentences affecting eye movement behavior. It must be noted, however, that most findings on the basic physiology of eye movement behavior have come from primate studies with little generalization to humans. Therefore, integrating sentence comprehension, motion simulation and visual attentional shifts with regard to cortical organization would require focusing on the areas that simulate motion, assemble meaning and guide oculomotor shifts, i.e. the pre-frontal eye fields, language areas and motor areas.

Based on our eye movement results with fictive motion sentences, the findings not only support simulation-based theories of language processing in an embodied framework but also provide strong reason to link findings from the cognitive neuroscience of language processing to the neurophysiology of eye movement behavior. Future studies can examine, in a unified manner, the dynamic interaction between language areas, visual attentional areas and areas responsible for processing motion so as to account for the phenomenon.

APPENDIX

List of the Hindi sentences used, with their English translations. Each sentence had a fictive motion (FM) and a non fictive motion (NFM) version.

- Yeh pul nadi ke upar se hokar guzarta hai. (FM)
  This bridge goes across the river.

  Yeh pul nadi ke upar hai. (NFM)
  This bridge is across the river.

- Yeh baada khet ke beech se hokar guzarta hai. (FM)
  This fence goes through the middle of the field.

  Yeh baada khet ke beech main hai. (NFM)
  This fence is in the middle of the field.

- Yeh sadak registaan se hokar guzarti hai. (FM)
  This road goes through the desert.

  Yeh sadak registaan main hai. (NFM)
  This road is in the desert.

- Yeh imaarat asmaan tak jaati hai. (FM)
  This building goes up to the sky.

  Yeh imaarat oonchi hai. (NFM)
  This building is very tall.

- Yeh raasta pahado ke beech se hokar guzarta hai. (FM)
  This way goes through the mountains.

  Yeh raasta pahado ke beech main hai. (NFM)
  This way is between the mountains.

- Yeh jaal is khambhe se guzar kar us khambhe tak jaata hai. (FM)
  The net goes from this pole to that pole.

  Yeh jaal do khambho ke beech main hai. (NFM)
  This net is between the two poles.

- Yeh rail ki patri gaon se hokar guzarti hai. (FM)
  This rail track goes through the village.

  Yeh rail ki patri gaon main hai. (NFM)
  This rail track is in the village.

- Yeh phoolon ki kyaari maidaan se hokar guzarti hai. (FM)
  This row of flowers goes through the field.

  Yeh phoolon ki kyaari maidaan main hai. (NFM)
  This row of flowers is in the field.

- Yeh kinaara nadi ke bagal se guzarta hai. (FM)
  The bank flows along with the river.

  Yeh kinaara nadi ke bagal main hai. (NFM)
  This bank is beside the river.

- Yeh bijli ke taar is khambhe se guzarkar us imaarat tak jaatein hai. (FM)
  These electric wires go from this pole to that building.

  Yeh bijli ke taar is khambhe aur imaarat ke beech main hai. (NFM)
  These electric wires are between the pole and the building.

- Yeh pagdandi jungle se hokar guzarti hai. (FM)
  This road goes through the jungle.

  Yeh pagdandi jungle main hai. (NFM)
  This road is in the jungle.

- Yeh patthar ki kataar samudra ke beech se hokar guzarti hai. (FM)
  This row of rocks goes through the middle of the sea.

  Yeh patthar ki kataar samudra ke beech main hai. (NFM)
  This row of rocks is in the middle of the sea.

- Yeh pedo ki kataar nahar ke kinaare se hokar guzarti hai. (FM)
  This row of trees runs along the river bank.

  Yeh pedo ki kataar nahar ke kinaare hai. (NFM)
  This row of trees is beside the river bank.

- Yeh pahad samudra ke kinaare se hokar guzarta hai. (FM)
  This mountain runs along the sea coast.

  Yeh pahad samudra ke kinaare hai. (NFM)
  This mountain is beside the sea.

- Yeh dewaar ghar ke saamne se hokar guzarti hai. (FM)
  This wall runs in front of the house.

  Yeh dewaar ghar ke saamne hai. (NFM)
  This wall is in front of the house.